Contour Integration Benchmark

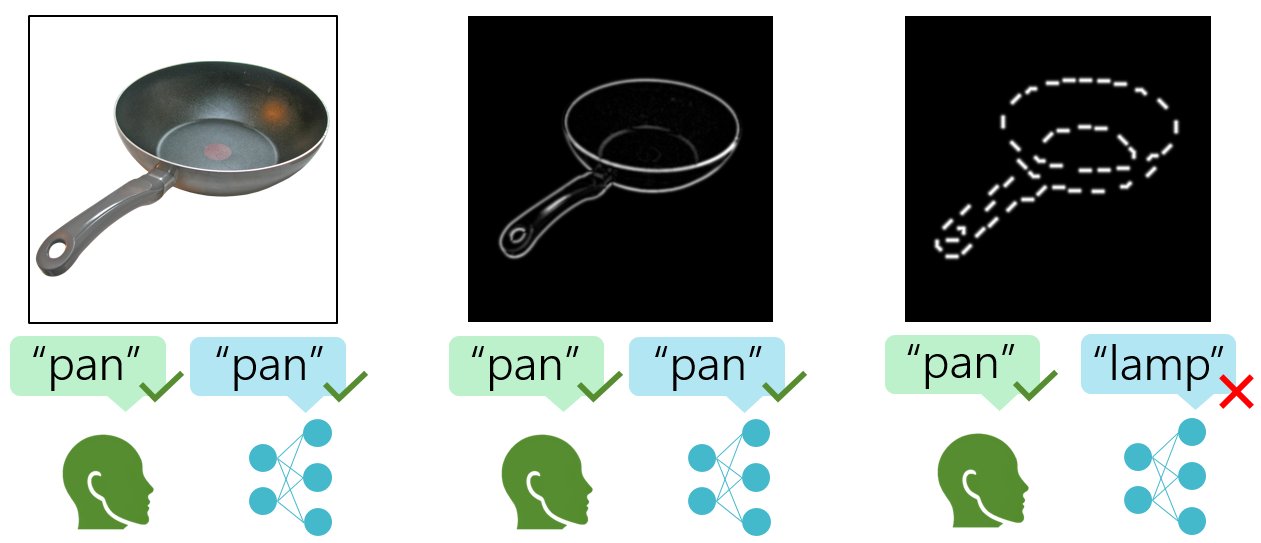

Testing whether AI models 'see' fragmented objects the way humans do.

The Challenge

Deep learning models excel at many vision tasks but often fail to generalize the way humans do, especially when recognizing fragmented or partially obscured objects. Understanding this gap requires controlled benchmarks.

The Outcome

- Demonstrated that a model's contour integration capability scales with training data size.

- Showed that models that share a human-like 'integration bias' perform better and are more robust.

- Established that contour integration is a learnable mechanism linked to shape bias.

- Provided a benchmark for evaluating model generalization and human-likeness.

Key Information

Modalities Used

App Stack

Multimodal Integration

Conceptual: Mixpeek could manage the large datasets of fragmented images, automate model testing pipelines, store results, and facilitate comparison across different model architectures and training runs.
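As a rough illustration of the "store results and compare across models" idea, the sketch below aggregates per-image predictions into an accuracy table keyed by model and fragmentation level. It uses only the Python standard library and does not call any Mixpeek API. The `Trial` record, its fields, the `fragment_level` scale, and the model labels are all hypothetical, and the sample records are made up to exercise the function rather than taken from the benchmark.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Trial:
    """One model prediction on one fragmented image (all fields hypothetical)."""
    model: str           # label for an architecture or training run
    fragment_level: int  # illustrative scale: percent of the contour left visible
    correct: bool        # whether the model's prediction matched the label


def accuracy_table(trials):
    """Return {model: {fragment_level: accuracy}} from a flat list of trials."""
    # counts[model][level] holds [number correct, number of trials]
    counts = defaultdict(lambda: defaultdict(lambda: [0, 0]))
    for t in trials:
        cell = counts[t.model][t.fragment_level]
        cell[0] += int(t.correct)
        cell[1] += 1
    return {
        model: {level: hits / total for level, (hits, total) in sorted(levels.items())}
        for model, levels in counts.items()
    }


# Made-up records purely to exercise the function; not benchmark results.
trials = [
    Trial("model_a", 20, False), Trial("model_a", 20, True),
    Trial("model_a", 80, True), Trial("model_a", 80, True),
    Trial("model_b", 20, False), Trial("model_b", 20, False),
    Trial("model_b", 80, True), Trial("model_b", 80, False),
]
table = accuracy_table(trials)
for model, by_level in table.items():
    print(model, by_level)
```

Keeping each trial as a flat record makes it straightforward to add further grouping keys later (for example, a training-set size), should a comparison along that axis be needed.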

Features Extracted:

Other Technologies

Python

Deep Learning Frameworks (e.g., PyTorch)

Data Analysis Libraries (e.g., Pandas, NumPy)

Benchmarking Tools