🚀 The Rise of the Dataset Engineer

Why the future of AI isn’t about bigger models — it’s about better data.

“Everyone wants to talk about the model. No one wants to talk about the dataset.”

– Basically every ML engineer ever

It’s no secret that AI is booming. New models are dropping every month, some with hundreds of billions of parameters, trained on trillions of tokens, and showing wild capabilities. But here’s the part you don’t hear as often:

Most of the heavy lifting in AI happens before (and after) the model is trained.

The unsung hero behind every AI breakthrough?

🧠 The Dataset Engineer.

Let’s unpack why.

🛠️ What Even Is Dataset Engineering?

Dataset engineering is everything that happens to data before it gets fed into a model — and everything that happens after to keep the model useful.

It includes:

- Collecting raw data (text, video, audio, images)

- Cleaning, filtering, labeling

- Deduplicating redundant samples

- Segmenting and structuring it

- Monitoring model failures to generate new training data

It’s not just janitorial work. It’s data strategy — and it makes or breaks the model.

🔍 Think: less “clean this data” and more “what data is worth learning from?”

🎬 Case Study: Cosmos and the 20M-Hour Video Diet

NVIDIA recently trained a massive AI called Cosmos to understand physics… by watching 20 million hours of video.

But here’s the kicker: they didn’t just dump all that raw footage into the model.

Read the paper: https://arxiv.org/pdf/2501.03575

They built a seriously smart pipeline first.

Two tricks they used:

🧩 Shot Boundary Detection

They broke up long videos into logical scenes by detecting when one shot ends and another begins — like cuts in a movie.

→ This gives the model coherent chunks to learn from.
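To make the idea concrete, here’s a minimal shot-boundary sketch using a classic heuristic: compare the intensity histograms of consecutive frames and flag a cut when they differ sharply. This is not the detector the Cosmos team used. It assumes frames arrive as flattened lists of grayscale pixel values (0–255), and the function names and the 0.5 threshold are illustrative choices.

```python
import random
from typing import List, Sequence

Frame = Sequence[int]  # assumption: flattened grayscale pixels, values 0-255


def frame_histogram(frame: Frame, bins: int = 16) -> List[float]:
    """Normalized intensity histogram of one grayscale frame."""
    counts = [0] * bins
    width = 256 // bins
    for px in frame:
        counts[min(px // width, bins - 1)] += 1
    total = max(len(frame), 1)
    return [c / total for c in counts]


def detect_shot_boundaries(frames: Sequence[Frame], threshold: float = 0.5, bins: int = 16) -> List[int]:
    """Return indices i where a new shot starts at frame i.

    A cut is flagged when the L1 distance between consecutive frame
    histograms (which ranges from 0 to 2) exceeds `threshold`.
    """
    boundaries: List[int] = []
    if not frames:
        return boundaries
    prev = frame_histogram(frames[0], bins)
    for i in range(1, len(frames)):
        cur = frame_histogram(frames[i], bins)
        if sum(abs(a - b) for a, b in zip(cur, prev)) > threshold:
            boundaries.append(i)
        prev = cur
    return boundaries


def split_into_shots(frames: Sequence[Frame], boundaries: List[int]) -> List[List[Frame]]:
    """Slice the frame sequence into contiguous shots at the boundary indices."""
    starts = [0] + boundaries
    ends = boundaries + [len(frames)]
    return [list(frames[s:e]) for s, e in zip(starts, ends)]


if __name__ == "__main__":
    rng = random.Random(0)
    dark = [[rng.randrange(0, 60) for _ in range(1024)] for _ in range(5)]
    bright = [[rng.randrange(180, 256) for _ in range(1024)] for _ in range(4)]
    cuts = detect_shot_boundaries(dark + bright)
    print(cuts)  # [5]
    print([len(shot) for shot in split_into_shots(dark + bright, cuts)])  # [5, 4]
```

A hard cut from a dark scene to a bright one shows up as a near-total histogram change. Gradual fades and fast camera motion fool simple histogram differences, which is why real pipelines often rely on learned detectors instead.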

🧠 Semantic Deduplication

They removed semantically similar clips — even if they weren’t byte-for-byte duplicates.

→ Instead of learning the same thing 100 times, the model gets diverse, unique examples.
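Here’s a simplified stand-in for semantic dedup: a greedy filter that keeps a clip only if its embedding isn’t too close (by cosine similarity) to any clip already kept. It assumes each clip already has an embedding vector from some image or video encoder, and the 0.95 threshold is an illustrative choice. It is not the exact method from the Cosmos paper.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def semantic_dedup(embeddings: Sequence[Vector], threshold: float = 0.95) -> List[int]:
    """Greedily keep clips whose similarity to every kept clip is below threshold.

    Returns the indices of the kept clips, in their original order.
    """
    kept: List[int] = []
    for i, emb in enumerate(embeddings):
        if all(cosine(emb, embeddings[j]) < threshold for j in kept):
            kept.append(i)
    return kept


if __name__ == "__main__":
    clips = [
        [1.0, 0.0, 0.0],    # clip 0
        [0.99, 0.05, 0.0],  # near-copy of clip 0 (e.g., re-encoded or cropped)
        [0.0, 1.0, 0.0],    # clip 2
        [0.0, 0.0, 1.0],    # clip 3
        [0.0, 0.98, 0.1],   # near-copy of clip 2
    ]
    print(semantic_dedup(clips))  # [0, 2, 3]
```

This pairwise loop is quadratic, so at the scale of millions of clips you’d swap in clustering or an approximate nearest-neighbor index (e.g., FAISS) to find candidate duplicates.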

Result: From 20M hours of footage, they built a clean, diverse, 100M-clip training set. That’s dataset engineering in action.

🚗 Uber, Tesla & the Data-First AI Movement

You don’t need to be NVIDIA to take dataset engineering seriously. Here’s how Uber and Tesla are leading with data.

🚕 Uber: AI That Rides on Data

Uber trains models for ETA prediction, fraud detection, and autonomous driving. Their teams:

- Sync data across LiDAR, GPS, cameras, etc.

- Use Petastorm to structure huge datasets for GPU training

- Built Apache Hudi (originally developed at Uber, now an Apache project) to apply incremental updates to large datasets, so training sets can be refreshed without full rebuilds

This lets them update models fast with the freshest ride data.

Read their announcement blog: https://www.uber.com/blog/from-predictive-to-generative-ai/
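Hudi itself runs on engines like Spark and is configured around a record key and an ordering (precombine) field. The plain-Python sketch below only illustrates the upsert idea behind “no full rebuilds needed”; it is not Hudi’s API, and `TripRecord`, `upsert`, and the column names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass(frozen=True)
class TripRecord:
    trip_id: str        # record key: identifies which row an update applies to
    updated_at: int     # ordering field: the newer version of a row wins
    eta_minutes: float  # hypothetical training column


def upsert(table: Dict[str, TripRecord], batch: Iterable[TripRecord]) -> Dict[str, TripRecord]:
    """Merge an incremental batch into a keyed table in place.

    New keys are inserted; existing keys are overwritten only when the
    incoming record is at least as new. Rows not in the batch are left alone,
    so nothing is rebuilt from scratch.
    """
    for record in batch:
        current = table.get(record.trip_id)
        if current is None or record.updated_at >= current.updated_at:
            table[record.trip_id] = record
    return table


if __name__ == "__main__":
    training_table: Dict[str, TripRecord] = {}
    upsert(training_table, [TripRecord("a", 1, 10.0), TripRecord("b", 1, 7.0)])
    # Later batch: a corrected ETA for "a", a stale late-arriving row for "b", a new trip "c"
    upsert(training_table, [TripRecord("a", 2, 12.5), TripRecord("b", 0, 99.0), TripRecord("c", 2, 4.0)])
    print({k: v.eta_minutes for k, v in sorted(training_table.items())})
    # {'a': 12.5, 'b': 7.0, 'c': 4.0}
```

The ordering field is what keeps a late-arriving stale update from clobbering fresher data.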

🚘 Tesla: The Infinite Data Loop

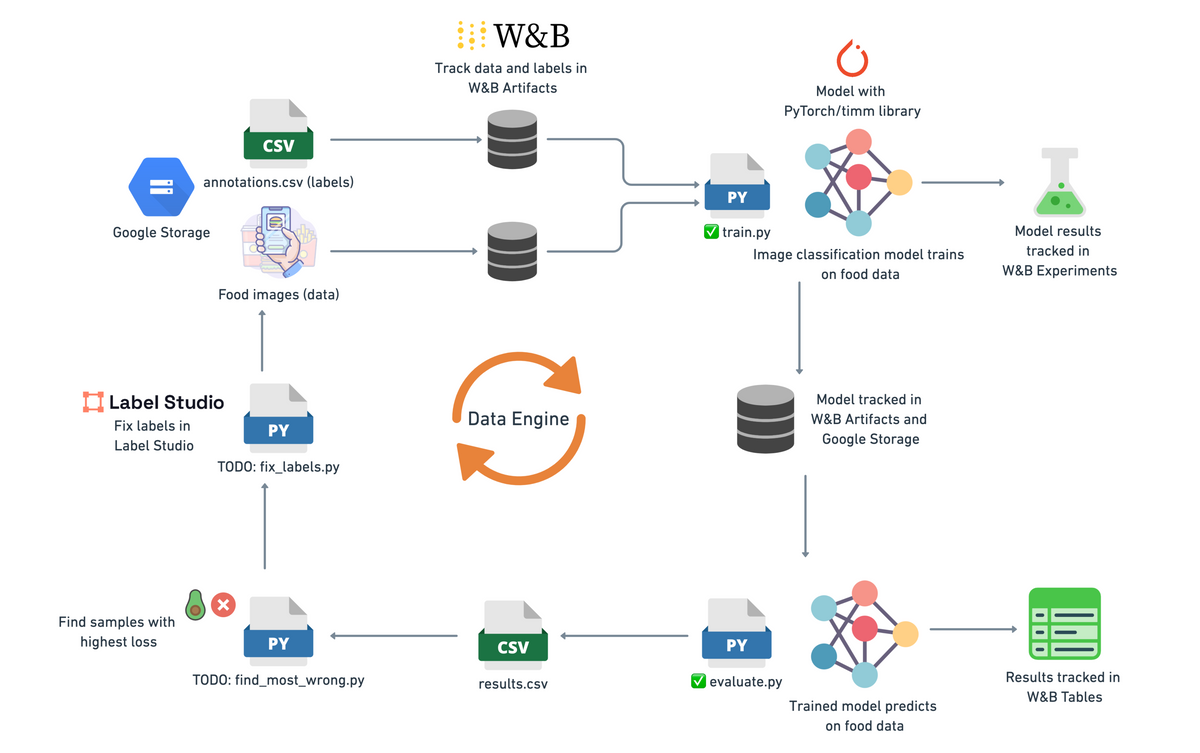

Tesla’s Autopilot team runs a “data engine” — it spots model failures on real roads, searches the fleet for more of those cases, labels them, and retrains.

- Car confused by a bike on a rack?

  → Find 500 more of those, label them, retrain.

- Weird new intersection?

  → Add it to the dataset, improve behavior.

This feedback loop is powered by world-class dataset engineering.
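The “search the fleet for more of those cases” step can be sketched as nearest-neighbor retrieval over embeddings. This is a toy illustration, not Tesla’s system: it assumes every clip already has an embedding from some encoder, the clip IDs and vectors are made up, and the labeling and retraining steps are left out.

```python
import heapq
import math
from typing import Dict, List, Sequence

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def mine_similar(failures: Sequence[Vector], pool: Dict[str, Vector], k: int) -> List[str]:
    """Return IDs of the k pool clips closest to any known failure case.

    Each clip is scored by its best cosine similarity to the failure set,
    so one query can cover several failure examples at once.
    """
    scored = [(max(cosine(emb, f) for f in failures), clip_id) for clip_id, emb in pool.items()]
    return [clip_id for _, clip_id in heapq.nlargest(k, scored)]


if __name__ == "__main__":
    failures = [[1.0, 0.0]]  # toy embedding of a "bike on a rack" miss
    fleet_pool = {
        "clip_a": [0.9, 0.1],
        "clip_b": [0.0, 1.0],
        "clip_c": [1.0, 0.05],
        "clip_d": [-1.0, 0.0],
    }
    print(mine_similar(failures, fleet_pool, k=2))  # ['clip_c', 'clip_a']
```

The returned clips would then go to labeling, and the newly labeled data back into the next training run, which closes the loop.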

📊 Why Models Need Data Curation, Not Just Size

It’s easy to get caught up in model size — GPT-3, Gemini, Claude… we’re talking billions of parameters. But in reality?

📈 Better data often beats bigger models.

Here’s why:

- Data defines what the model can learn

- Diverse, well-curated samples can reduce bias

- Cleaner datasets can reduce hallucinations and failures

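💡 Fun fact: Before training GPT-3, OpenAI filtered Common Crawl by its similarity to high-quality reference corpora and fuzzily deduplicated documents. They also sampled higher-quality sources like Wikipedia more often than their raw size alone would dictate. Smart.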
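Weighting sources comes down to simple arithmetic: if a source supplies a share w of the training tokens but holds only S tokens, a run of B total tokens passes over it w·B/S times. The sketch below uses made-up corpus sizes and weights (not GPT-3’s actual mixture) to show how a small, high-quality source ends up repeated while a huge web crawl is only partly seen.

```python
import random
from typing import Dict


def effective_epochs(sizes_b: Dict[str, float], weights: Dict[str, float], budget_b: float) -> Dict[str, float]:
    """Number of passes over each source during training.

    sizes_b:  tokens available per source, in billions
    weights:  share of training tokens drawn from each source (sums to 1)
    budget_b: total training tokens, in billions
    """
    return {name: weights[name] * budget_b / sizes_b[name] for name in sizes_b}


def sample_source(weights: Dict[str, float], rng: random.Random) -> str:
    """Choose the source of the next training document by mixture weight."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


if __name__ == "__main__":
    sizes = {"web": 400.0, "books": 50.0, "wiki": 5.0}  # hypothetical corpus sizes
    weights = {"web": 0.7, "books": 0.2, "wiki": 0.1}   # hypothetical mixture
    for name, epochs in effective_epochs(sizes, weights, budget_b=300.0).items():
        print(f"{name}: {epochs:.2f} epochs")
```

With these numbers, the web source is seen roughly half a time while the small wiki source is repeated about six times, even though it holds a tiny fraction of the tokens.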

🧰 So… Do I Need a Whole Data Team?

If you’re Google or Tesla? Probably.

But if you’re a growing company building AI-powered features, dataset engineering still matters — and you don’t need a 20-person team to pull it off.

💡 Enter: Mixpeek

That’s where Mixpeek comes in.

Mixpeek builds custom pipelines that:

✅ Ingest your videos, images, audio, text

✅ Extract rich features (objects, faces, speech, etc.)

✅ Filter, dedupe, and structure your data

✅ Deliver it as an indexed, searchable, clean dataset

✅ Continuously monitor for new data signals or failures

You tell us what insights or features you want, and we build the data engine around it.

🧪 Example: Want to search “customers interacting with shelves” in your store footage? Mixpeek can slice, filter, and index that behavior for you.

→ You focus on building. We handle the messy stuff.

🎯 TL;DR – Don’t Just Train Models. Train on the Right Data.

The next wave of AI innovation isn’t about who has the flashiest model.

It’s about who feeds their models the best-curated, highest-signal data.

Dataset engineers are quietly becoming the most valuable players in AI — and the smartest teams are the ones investing in them (or working with partners who do).

Want to see how Mixpeek helps you get there?

👉 Explore our dataset engineering solutions →

❓ FAQ: All About Dataset Engineering

🤔 What’s the difference between dataset engineering and data engineering?

Data engineering is usually about moving and storing data — think building pipelines, data warehouses, and ETL jobs for analytics.

Dataset engineering, on the other hand, is about preparing data specifically for machine learning. It includes things like:

- Choosing which data samples to include

- Labeling and annotating

- Normalizing formats across modalities

- Filtering bad or redundant examples

- Balancing datasets to avoid bias

- Setting up feedback loops to continuously improve the training data
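As one concrete example of balancing, here’s a minimal sketch that caps over-represented labels by random downsampling. It assumes samples are `(sample_id, label)` pairs, and the cap of 20 is arbitrary; upsampling rare classes or reweighting the loss are common alternatives.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Sample = Tuple[str, str]  # (sample_id, label)


def balance_by_label(samples: Sequence[Sample], cap: int, seed: int = 0) -> List[Sample]:
    """Randomly downsample any label with more than `cap` samples.

    Labels at or under the cap are kept whole, so rare classes lose nothing.
    """
    rng = random.Random(seed)
    by_label: Dict[str, List[Sample]] = defaultdict(list)
    for sample in samples:
        by_label[sample[1]].append(sample)

    balanced: List[Sample] = []
    for group in by_label.values():
        balanced.extend(rng.sample(group, cap) if len(group) > cap else group)
    return balanced


if __name__ == "__main__":
    data = (
        [(f"car_{i}", "car") for i in range(100)]
        + [(f"bus_{i}", "bus") for i in range(30)]
        + [(f"bike_{i}", "bike") for i in range(10)]
    )
    kept = balance_by_label(data, cap=20)
    counts = {label: sum(1 for _, lab in kept if lab == label) for label in ("car", "bus", "bike")}
    print(counts)  # {'car': 20, 'bus': 20, 'bike': 10}
```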

If data engineering builds the plumbing, dataset engineering decides what flows through the pipes.

🧠 What does a dataset engineer actually do day to day?

It varies, but typically includes:

- Designing data curation workflows

- Writing scripts to clean, slice, and deduplicate data

- Creating labeling schemas and managing annotation pipelines

- Monitoring model performance to identify gaps in data

- Building and versioning datasets over time

- Coordinating with ML teams to align data structure with training needs
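For the versioning piece, one common approach is to describe each dataset snapshot as a manifest of sample paths and content hashes, then derive a version ID from that manifest. The sketch below is a minimal illustration of the idea (dedicated tools do much more); the function names and the 12-character ID length are arbitrary choices.

```python
import hashlib
import json
from typing import Dict, List


def content_hash(data: bytes) -> str:
    """SHA-256 of one sample's raw bytes."""
    return hashlib.sha256(data).hexdigest()


def dataset_version(manifest: Dict[str, str]) -> str:
    """Deterministic version ID for a snapshot (path -> content hash).

    Keys are sorted before hashing, so insertion order doesn't matter.
    """
    canonical = json.dumps(manifest, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


def diff_manifests(old: Dict[str, str], new: Dict[str, str]) -> Dict[str, List[str]]:
    """Which samples were added, removed, or changed between two versions."""
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "changed": sorted(k for k in old.keys() & new.keys() if old[k] != new[k]),
    }


if __name__ == "__main__":
    v1 = {"clips/a.mp4": content_hash(b"aaa"), "clips/b.mp4": content_hash(b"bbb")}
    v2 = {"clips/a.mp4": content_hash(b"aaa-relabeled"), "clips/c.mp4": content_hash(b"ccc")}
    print(dataset_version(v1), dataset_version(v2))
    print(diff_manifests(v1, v2))
    # {'added': ['clips/c.mp4'], 'removed': ['clips/b.mp4'], 'changed': ['clips/a.mp4']}
```

Recording the version ID next to each training run makes it possible to tell exactly which data a given model saw.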

In smaller orgs, they often wear multiple hats — touching ML, infra, and product.

💼 What kinds of companies hire dataset engineers?

- AI startups building models in-house

- Autonomous vehicle companies

- Healthcare & biotech (e.g. medical imaging)

- AdTech and content platforms (e.g. video analysis)

- Retail & surveillance analytics

- Any company working with computer vision, NLP, or multimodal AI

In short, any org that trains models using unique data.

💰 What’s the salary of a dataset engineer?

It depends on experience and geography, but here’s a rough breakdown:

| Role Level | U.S. Salary Range (2024) |
|---|---|
| Entry-Level | $100K – $140K |
| Mid-Level (3–5 yrs) | $140K – $180K |
| Senior/Lead | $180K – $230K+ |
| Specialized (e.g. AV) | $250K+ total comp |

Startups may offer lower base but higher equity; big tech pays top dollar, especially in AI orgs.

📚 What skills do I need to become one?

Technical Skills:

- Python (NumPy, pandas, PyTorch/TensorFlow)

- Shell scripting and data tooling (e.g. ffmpeg, jq, boto3)

- Working knowledge of machine learning workflows

- Data storage formats (Parquet, HDF5, TFRecords)

- Familiarity with vector stores and retrieval systems (e.g. Qdrant, FAISS)

Soft Skills:

- Critical thinking: What’s valuable data? What’s noise?

- Communication: Syncing with ML teams, annotators, and PMs

- Data intuition: Spotting subtle imbalances or edge cases

🔁 Is this the same as data labeling?

Labeling is just one part of it.

Dataset engineers often design labeling workflows — but also:

- Choose which examples need labels

- Build active learning loops

- Manage data versions across experiments

- Optimize datasets for speed, balance, and signal
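“Choosing which examples need labels” is often done with uncertainty sampling, one of the simplest active learning strategies: send annotators the samples the current model is least sure about. Here’s a minimal sketch using prediction entropy. It assumes you already have per-class probabilities for each unlabeled sample; the IDs and numbers are made up.

```python
import math
from typing import Dict, List, Sequence


def entropy(probs: Sequence[float]) -> float:
    """Shannon entropy (in nats) of a predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_labeling(predictions: Dict[str, Sequence[float]], budget: int) -> List[str]:
    """Return the `budget` sample IDs with the most uncertain predictions."""
    ranked = sorted(predictions, key=lambda sid: entropy(predictions[sid]), reverse=True)
    return ranked[:budget]


if __name__ == "__main__":
    preds = {
        "img_1": [0.98, 0.01, 0.01],  # confident: labeling it adds little
        "img_2": [0.34, 0.33, 0.33],  # near-uniform: very uncertain
        "img_3": [0.6, 0.4, 0.0],
        "img_4": [0.5, 0.5, 0.0],     # coin flip between two classes
    }
    print(select_for_labeling(preds, budget=2))  # ['img_2', 'img_4']
```

After the selected samples are labeled and the model is retrained, the predictions are recomputed and the loop repeats.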

They’re closer to a machine learning engineer than an annotator.