Two Sides of the Ecosystem, One Platform

Built for both sides of the advertising ecosystem

Maximize Inventory Value

Enrich content inventory with contextual signals to attract premium demand

- Higher CPMs & Fill Rates

Rich contextual data attracts more competitive bids

- First-Party Data Monetization

Turn your content into valuable contextual signals

- Automated Content Classification

IAB taxonomy tagging at scale across all media types

- Brand Safety & Suitability

Protect inventory value with comprehensive content analysis

Real Impact: Publishers see 25-35% CPM increases with deep multimodal contextual signals in bid requests

Optimize Media Spend

Precision targeting with multimodal context—reduce waste, improve performance

- Higher Relevance, Lower CPA

Better context matching = better campaign outcomes

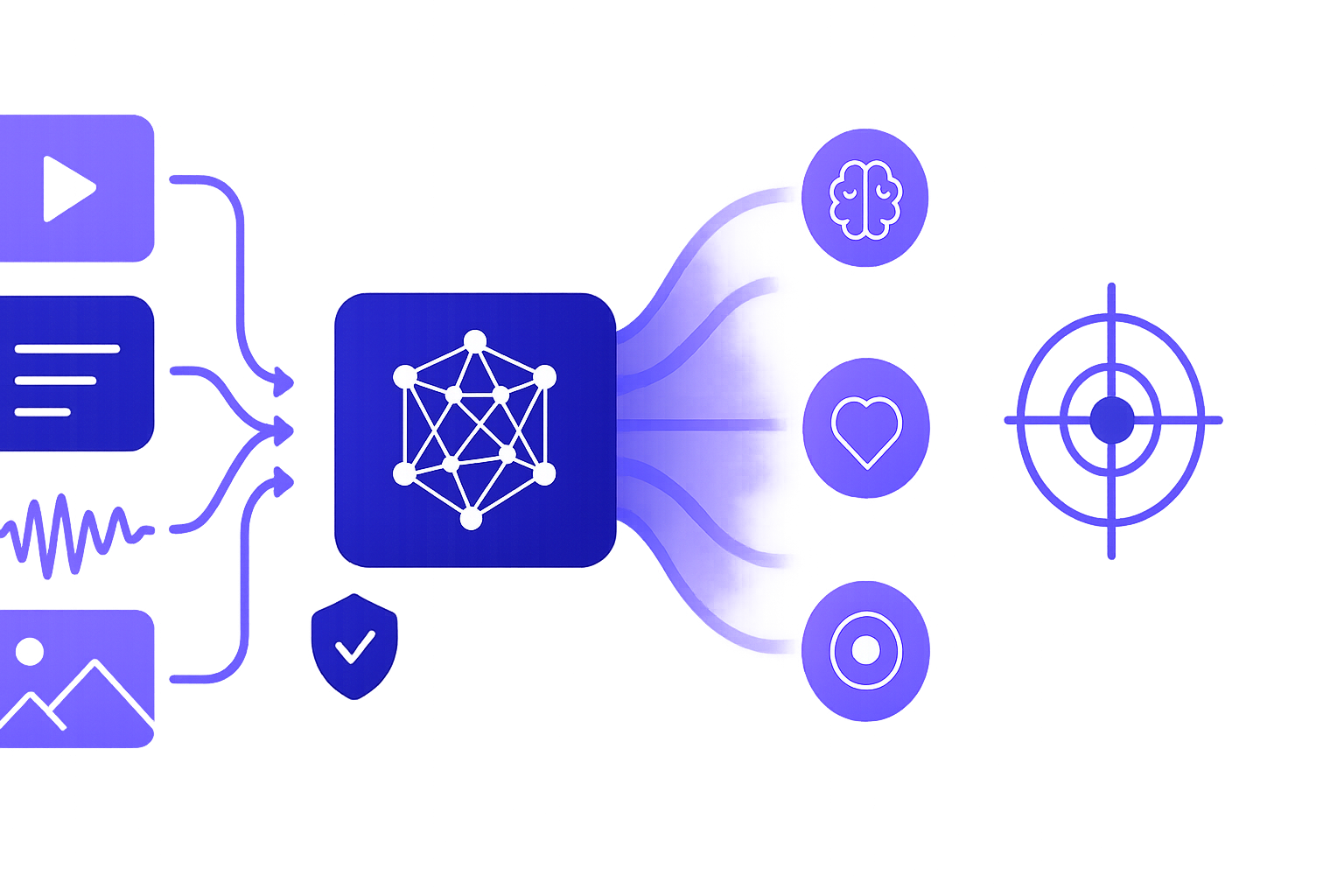

- Multimodal Content Understanding

Analyze images, video, audio—not just text metadata

- Brand Safety Verification

Verify placement suitability before serving ads

- Cookieless Performance

Maintain targeting quality without user tracking

Real Impact: DSPs report 40% improvement in contextual targeting accuracy and 60% reduction in brand safety incidents

Proven in Production

Real results from teams using Mixpeek for contextual intelligence

Across all media types compared to keyword-only targeting

Fewer wasted impressions on irrelevant or unsafe content

Users find relevant content across modalities in seconds

Video Publisher Increases CPMs by 32% with Rich Contextual Signals

A leading video publisher used Mixpeek to enrich inventory with IAB categories, sentiment, and visual context—attracting premium demand and increasing CPMs by 32%.

Leading DSP Improves Contextual Targeting Accuracy by 40%

A major DSP used Mixpeek's multimodal analysis to understand content beyond keywords, reducing mismatched placements by 60% and improving contextual targeting accuracy by 40%.

Built for the Entire Advertising Ecosystem

Privacy-first contextual intelligence that performs without cookies—for publishers monetizing inventory and advertisers optimizing spend

Multimodal by Design

One pipeline for text, images, video, audio, PDFs—understand context across all content types.

Your Infrastructure, Your Rules

Build on your own storage and databases: complete control, full flexibility, no vendor lock-in.

Better Relevance, Fewer Hacks

Hybrid retrieval + late-interaction models for fine-grained contextual matches.
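Hybrid retrieval usually means running a keyword ranker and a vector ranker, then merging their result lists. Below is a minimal sketch of one common merging rule, reciprocal rank fusion (RRF). The document IDs, the two input lists, and the k = 60 default are illustrative assumptions. They do not describe Mixpeek's internal ranking.

```javascript
// Reciprocal rank fusion: score(d) = sum over rankers of 1 / (k + rank(d)),
// with 1-based ranks. k = 60 is a commonly used default.
function reciprocalRankFusion(rankings, k = 60) {
  const scores = new Map();
  for (const ranking of rankings) {
    ranking.forEach((docId, index) => {
      const rank = index + 1;
      scores.set(docId, (scores.get(docId) ?? 0) + 1 / (k + rank));
    });
  }
  // Highest fused score first.
  return [...scores.entries()]
    .sort((a, b) => b[1] - a[1])
    .map(([docId, score]) => ({ docId, score }));
}

// Hypothetical result lists for the same query.
const keywordHits = ["article-12", "video-7", "podcast-3"];
const vectorHits = ["video-7", "image-44", "article-12"];

const fused = reciprocalRankFusion([keywordHits, vectorHits]);
console.log(fused.map((r) => r.docId));
// → [ 'video-7', 'article-12', 'image-44', 'podcast-3' ]
```

Items found by both rankers (here `video-7` and `article-12`) rise to the top. That is the reason for combining lexical and semantic signals.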

API-First & Fast to Ship

Simple SDKs + webhooks to light up contextual targeting, search & recommendations.

How It Works

Three simple steps to activate multimodal contextual targeting

Ingest

Connect to S3 or upload files—automatic content detection and queuing.

Enrich

Extract embeddings, transcripts, OCR, and contextual features.

Activate

Query and retrieve ranked results with precise timecodes and segments.
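The Activate step returns segments rather than whole files. Below is a minimal sketch of that ranking. It assumes each enriched segment already carries an embedding plus start and end timecodes. The segment objects, the 3-dimensional vectors, and the `searchSegments` helper are hypothetical stand-ins for real model output and the real API.

```javascript
// Cosine similarity between two equal-length vectors.
function cosine(a, b) {
  let dot = 0, normA = 0, normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Hypothetical helper: rank enriched segments against a query embedding
// and return only the location (file + timecodes) and score of the top K.
function searchSegments(queryVector, segments, topK = 3) {
  return segments
    .map((s) => ({ ...s, score: cosine(queryVector, s.embedding) }))
    .sort((a, b) => b.score - a.score)
    .slice(0, topK)
    .map(({ fileId, startSec, endSec, score }) => ({ fileId, startSec, endSec, score }));
}

// Toy segments with made-up 3-dimensional embeddings.
const segments = [
  { fileId: "match.mp4", startSec: 0, endSec: 10, embedding: [0.9, 0.1, 0.0] },
  { fileId: "match.mp4", startSec: 10, endSec: 20, embedding: [0.1, 0.9, 0.1] },
  { fileId: "recipe.mp4", startSec: 30, endSec: 40, embedding: [0.0, 0.2, 0.95] },
];

const hits = searchSegments([1, 0, 0], segments, 1);
console.log(hits); // top hit: match.mp4, 0 to 10 s
```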

Activate Contextual Intelligence Everywhere

From precision ad placement to content safety and semantic discovery

Precision Ad Placement

Place ads in brand-safe, relevant environments using multimodal content analysis.

- 70% increase in relevance across all media types

- Reduce wasted ad spend on misaligned placements

- Discover contextual opportunities competitors miss

Content Safety & Brand Protection

Ensure brand-safe placements with real-time content analysis across all modalities.

- Real-time content analysis across modalities

- Custom safety policies for your brand values

- Prevent issues before ads are placed
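A custom safety policy can be expressed as rules over the category labels and confidence scores produced during analysis. The sketch below assumes a hypothetical output shape (`{ label, confidence }` per category) and hypothetical policy fields (`blocked`, `minConfidence`). The category names are illustrative strings, not official IAB IDs.

```javascript
// Hypothetical brand policy: block a placement if any blocked category
// is detected with confidence at or above the threshold.
const brandPolicy = {
  blocked: new Set(["Crime", "Disasters", "Adult Content"]),
  minConfidence: 0.7,
};

// Returns a decision plus the categories that triggered it, so the
// result can be logged or audited before an ad is placed.
function evaluatePlacement(categories, policy) {
  const violations = categories.filter(
    (c) => policy.blocked.has(c.label) && c.confidence >= policy.minConfidence
  );
  return { allowed: violations.length === 0, violations: violations.map((c) => c.label) };
}

// Made-up classifier output for two pages.
const sportsPage = [
  { label: "Sports", confidence: 0.95 },
  { label: "Crime", confidence: 0.2 },
];
const newsPage = [
  { label: "News", confidence: 0.9 },
  { label: "Disasters", confidence: 0.82 },
];

console.log(evaluatePlacement(sportsPage, brandPolicy)); // { allowed: true, violations: [] }
console.log(evaluatePlacement(newsPage, brandPolicy)); // { allowed: false, violations: [ 'Disasters' ] }
```

A confidence threshold lets each brand choose how strict to be. A low-confidence "Crime" label on a sports page does not block the placement, but a confident "Disasters" label on a news page does.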

Semantic Content Discovery

Find frames in video, tables in PDFs, and moments in audio with intent-based search.

- Single search box across all content types

- Intent-based matching beyond keywords

- Reduced null results, faster time-to-answer

AI Agents with Real Context

Ground LLMs in precise segments—pages, frames, clips—for accurate, cited responses.

- RAG with receipts—precise segment retrieval

- Reduce hallucinations with grounded context

- Timecode & page-level citations
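Grounding an LLM in precise segments mostly comes down to passing retrieved text along with a citation the model can repeat. Below is a minimal sketch of that step. It assumes retrieved segments arrive as objects with a hypothetical `source`, an optional `page` or `startSec`, and `text`. It only builds the context string and does not call any model.

```javascript
// Format seconds as mm:ss for timecode citations.
function toTimecode(totalSeconds) {
  const m = Math.floor(totalSeconds / 60);
  const s = Math.floor(totalSeconds % 60);
  return `${String(m).padStart(2, "0")}:${String(s).padStart(2, "0")}`;
}

// Page-level citation for documents, timecode-level citation for media.
function citationFor(segment) {
  if (segment.page !== undefined) return `${segment.source}, p. ${segment.page}`;
  return `${segment.source} @ ${toTimecode(segment.startSec)}`;
}

// Number each segment so the model can cite [1], [2], ... in its answer.
function buildGroundedContext(segments) {
  return segments
    .map((seg, i) => `[${i + 1}] (${citationFor(seg)}) ${seg.text}`)
    .join("\n");
}

// Toy retrieved segments (illustrative text).
const retrieved = [
  { source: "rate-card.pdf", page: 4, text: "Pre-roll CPM floor is set per category." },
  { source: "keynote.mp4", startSec: 125, text: "We moved all targeting to contextual signals." },
];

const context = buildGroundedContext(retrieved);
console.log(context);
// [1] (rate-card.pdf, p. 4) Pre-roll CPM floor is set per category.
// [2] (keynote.mp4 @ 02:05) We moved all targeting to contextual signals.
```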

Powered by Industry-Standard Taxonomies

Industry-standard classification with IAB Content Taxonomy 3.0 and custom hierarchies

IAB Content Taxonomy 3.0

Industry-standard classification for consistent categorization across campaigns and the programmatic ecosystem.

- 700+ categories for precise classification

- Automatic migration from IAB 2.x to 3.0

- Industry-wide compatibility

Why Taxonomies Are Essential for Contextual Targeting

Consistency

Standardized categories ensure consistent classification across all your content and campaigns

Scale

Automate content classification at scale without manual tagging or intervention

Brand Safety

Categorize content for brand safety and suitability scoring based on your guidelines

Prebid.js Connector

Pass contextual signals directly into Prebid auctions as first-party data

Pass IAB categories, sentiment, and content features to bidders

Enhance bid requests without third-party cookies

Better targeting data = more competitive bids

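```javascript
// Assumption: the Prebid.js build includes a Real-Time Data (RTD) submodule
// registered as 'mixpeek'. Prebid modules are compiled in at build time,
// not added at runtime, so they are configured via setConfig.
var pbjs = pbjs || {};
pbjs.que = pbjs.que || [];

pbjs.que.push(function () {
  pbjs.setConfig({
    realTimeData: {
      auctionDelay: 300, // max ms to wait for contextual data
      dataProviders: [{
        name: 'mixpeek',
        waitForIt: true,
        params: { apiKey: 'your-api-key', features: ['iab', 'sentiment'] }
      }]
    }
  });

  // Contextual data automatically enriches bid requests
  pbjs.requestBids({
    adUnits: adUnits, // your standard ad unit config
    bidsBackHandler: initAdserver
  });
});
```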
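Besides a dedicated module, Prebid.js accepts first-party data through its `ortb2` configuration, which bid adapters forward in the OpenRTB request. Below is a minimal sketch that converts contextual signals into an OpenRTB `site.content.data` segment block. The input shape, the `mixpeek.com` provider name, and the category IDs are assumptions. `segtax: 7` is the IAB segment-taxonomy value for Content Taxonomy 3.0.

```javascript
// Convert hypothetical contextual signals into an OpenRTB 2.x
// site.content.data entry. ext.segtax = 7 identifies IAB Content Taxonomy 3.0.
function toOrtb2ContentData(signals, providerName = "mixpeek.com") {
  return {
    site: {
      content: {
        data: [
          {
            name: providerName, // assumption: provider label bidders will see
            ext: { segtax: 7 },
            segment: signals.iabCategoryIds.map((id) => ({ id })),
          },
        ],
      },
    },
  };
}

// Example signals for one page (placeholder category IDs).
const signals = { iabCategoryIds: ["483", "488"] };
const ortb2 = toOrtb2ContentData(signals);
console.log(JSON.stringify(ortb2.site.content.data[0]));
// {"name":"mixpeek.com","ext":{"segtax":7},"segment":[{"id":"483"},{"id":"488"}]}
```

On a page, the resulting object could be merged into global first-party data with `pbjs.mergeConfig({ ortb2 })` inside `pbjs.que.push(...)` before `pbjs.requestBids` runs. Sentiment has no standard OpenRTB field, so it would need an `ext` extension agreed with bidders.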

Context Without Cookies, Insight Without Uploads

Like the best contextual ad platforms, but for your own multimodal data

Traditional Contextual Ad Tech

- ✗ Limited to publisher networks

- ✗ Black-box algorithms

- ✗ No control over data or models

- ✗ Primarily text & metadata-based

Mixpeek Multimodal Intelligence

- ✓ Infrastructure you own and customize

- ✓ Complete flexibility, adapted to your needs

- ✓ No vendor lock-in, no black boxes

- ✓ Text + images + video + audio + PDFs

Infrastructure you control. Flexibility you need.

Your storage, your databases, your rules—customize everything to fit your business exactly.

Built for Control, Performance & Privacy

Infrastructure designed for flexibility—customize and scale on your terms

Late-Interaction Retrieval

Fine-grained matching at the token level—not just document similarity. Get precise relevance for complex queries.
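Late-interaction models such as ColBERT keep one embedding per token. They score a document by taking, for each query token, its best match among the document's tokens, then summing those maxima (MaxSim). Below is a minimal sketch of that scoring step. It assumes token embeddings are already L2-normalized, so a dot product equals cosine similarity. The 2-dimensional vectors are toy values, not model output.

```javascript
// Dot product of two equal-length vectors.
function dot(a, b) {
  let sum = 0;
  for (let i = 0; i < a.length; i++) sum += a[i] * b[i];
  return sum;
}

// MaxSim: for each query token, take its highest similarity to any
// document token, then sum those maxima across query tokens.
function maxSimScore(queryTokens, docTokens) {
  let total = 0;
  for (const q of queryTokens) {
    let best = -Infinity;
    for (const d of docTokens) best = Math.max(best, dot(q, d));
    total += best;
  }
  return total;
}

// Toy normalized token embeddings.
const query = [[1, 0], [0, 1]];
const docA = [[1, 0], [0.6, 0.8]];
const docB = [[0.8, 0.6], [0.8, 0.6]];

console.log(maxSimScore(query, docA).toFixed(2)); // 1.80
console.log(maxSimScore(query, docB).toFixed(2)); // 1.40
```

Averaging each side into a single vector would rank `docB` slightly higher here: the dot product of the mean vectors is 0.7 versus 0.6. Token-level matching ranks `docA` first because it has a close match for each query token.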

Use Your Database

Choose any database that fits your needs—Qdrant, pgvector, Supabase, or others. Complete flexibility to match your existing infrastructure.

Use Your Storage

Connect to any S3-compatible storage, such as Amazon S3, Cloudflare R2, or GCS. Your data stays in your environment, under your control.

Frequently Asked Questions

How do publishers benefit from multimodal contextual signals?

Publishers enrich inventory with IAB categories, sentiment, and visual context that is passed to bidders in real time, increasing CPMs by 25-35% on average.

Can we keep data in our own cloud?

Yes. Use your own storage and databases with complete control—data never leaves your environment.

Which databases are commonly used?

Qdrant, pgvector, and Supabase Vector are the most popular. Production patterns and examples are documented in our integration guides.

Do you handle video deeply?

Yes—full support for chunking, scene detection, and timecode-level search within videos.
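Timecode-level search depends on splitting each video into segments that can be indexed individually. Below is a minimal sketch of fixed-length windowing with overlap, a simpler stand-in for the scene detection mentioned above. The 10-second window and 2-second overlap are illustrative defaults, not documented settings.

```javascript
// Split a video of durationSec into overlapping [start, end) windows.
// Overlap keeps moments near a boundary from being cut in half.
function chunkVideo(durationSec, windowSec = 10, overlapSec = 2) {
  if (overlapSec >= windowSec) throw new Error("overlap must be smaller than window");
  const step = windowSec - overlapSec;
  const chunks = [];
  for (let start = 0; start < durationSec; start += step) {
    const end = Math.min(start + windowSec, durationSec);
    chunks.push({ startSec: start, endSec: end });
    if (end === durationSec) break; // this window already reaches the end
  }
  return chunks;
}

const chunks = chunkVideo(25);
console.log(chunks); // windows 0-10, 8-18, 16-25
```

Scene detection would place boundaries at visual cuts instead of fixed intervals. However, each resulting segment still needs the same `startSec`/`endSec` bookkeeping so search hits can point to a timecode.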

How is this better than "just text search"?

Multimodal embeddings find relevance in images, video, and audio that text-only search misses.

What makes Mixpeek different from contextual ad platforms like Seedtag?

Seedtag is a closed SaaS platform. Mixpeek is infrastructure you own—customize freely, use your own storage and databases, maintain full control.